Note: This post is a bit long, but it’s the story behind the evolution of our project to teach data analytics and data science to a teenager, leveraging her love of ice hockey.

There have been a few times in my career when I made decisions that, in retrospect, were a lot better for my family than I initially thought. When I made each decision, I did weigh its impact on my family, but two of them turned out to be the best things that could have happened. The first was back in 2011, when I quit my job. My younger daughter was struggling in school, and having the time and flexibility to get her the help she needed would have been extremely difficult on the schedule I had been working. The second happened recently: in March I left another job to join my husband in running our business full-time. Since March, I've been able to spend one-on-one time with each of my daughters, taking separate spring break trips. More importantly (at least for this post), I was able to work with Cayla, our 16-year-old, on her summer internship project.

This story begins in the spring, when we decided to hire Cayla as an intern at Digital Ambit, our software and data integration consulting business. We knew we wanted her to use the time productively; specifically, we hoped to teach Cayla some technical skills. The most obvious route would have been to have Carson teach her programming. However, Carson was more than 100% utilized on our consulting work, while I had a bit more available time because I was working on the business. We needed to get Cayla some tech skills without severely impacting Carson's ability to deliver on our billable work. That left me to figure something out.

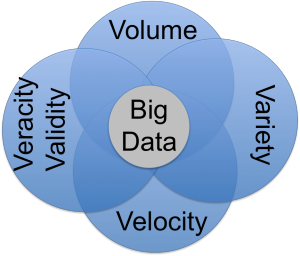

My background is fairly diverse, spanning both technical and software skills. I consider myself a technical project manager who leverages my technical skills to manage customers and projects. While I can manage any technical project or implementation, my hands-on technical experience focuses on databases and data management. I had recently taken some Coursera data science courses and dipped my toe into the R world. I finally decided Cayla would do a data analytics/science project using hockey statistics to see if she could predict who would win next year's Stanley Cup.

I bought Cayla a couple of books: *Data Science for Business* and *Practical Data Science with R*. I knew Cayla had never studied statistics, and I had a few concerns about how complex most resources on data science and R are. I had Cayla write a blog to make sure she could articulate the material she was learning. Once she started picking up some of the basics, we talked through the project at a very high level so Cayla knew what the next steps were. This was very much a hands-on project for her. She had to find the data, download it, cleanse it, and figure out the R syntax to load and analyze it. I gave her space to work through issues, especially after the first few times she came to me with a problem in R and, after I asked whether she had checked her syntax, I ended up pointing out a missing comma or other syntax error.

We were about a month into the project before Cayla could bring all the pieces together and really explain what she was trying to do. She could relate her daily work to the project and had mapped out next steps aligned to her business question ("Who will win the next Stanley Cup?"). At the same time, we learned we had been accepted to present this story at the DC Web Women Code(Her) conference. This intensified the pressure and added a hard deadline of September 12, 2015.

This is where it got a bit difficult for Cayla. By this point she had gathered all the data she thought she needed, cleansed it (or so she thought), and found at least one way to calculate the historical means and populate the 2015-2016 statistics. The complexity of the statistical models, and of the documentation describing them, made this a real sticking point for Cayla. Unfortunately, the method she had been using, along with her still-dirty data, made reproducibility and data modeling extremely difficult.

At this point, I stepped in to help in a more hands-on way. I knew I wanted to create an R script to share during our presentation, so I started walking through Cayla's syntax. Sometimes approaches that work in isolation don't work well when combined with the other methods you've applied. It took some intense focus to step through the process, cleanse the data to a standard R could process, and use different syntax for calculating historical means and filling gaps. The hardest part was finding clear, concise examples from people who had done this before. Ultimately, I found syntax that worked to run models against the data and analyze it. However, we were not successful in getting the model to predict any winners.
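Our actual R script is on GitHub, but the idea of "historical means and filling gaps" may be easier to picture with a tiny example. The sketch below is a hypothetical illustration in plain Python, not the syntax we used. The team names, seasons, and goal totals are made up, and simple mean imputation is only one way to fill gaps.

```python
from statistics import mean

# Hypothetical data: team -> {season: goals scored}. None marks a gap in the
# raw data (for example, a row that was missing after download and cleansing).
history = {
    "Team A": {"2012-13": 200, "2013-14": 210, "2014-15": 220},
    "Team B": {"2012-13": None, "2013-14": 210, "2014-15": 230},
}


def historical_mean(seasons):
    """Average a team's stat over only the seasons that actually have a value."""
    values = [v for v in seasons.values() if v is not None]
    return mean(values) if values else None


def fill_gaps(history):
    """Return a copy where each missing season is replaced by that team's historical mean."""
    filled = {}
    for team, seasons in history.items():
        team_mean = historical_mean(seasons)
        filled[team] = {
            season: value if value is not None else team_mean
            for season, value in seasons.items()
        }
    return filled


def project_next_season(history, next_season="2015-16"):
    """Naive projection: use each team's historical mean as next season's value."""
    return {
        team: {next_season: historical_mean(seasons)}
        for team, seasons in history.items()
    }


if __name__ == "__main__":
    print(fill_gaps(history)["Team B"])
    print(project_next_season(history))
```

Mean imputation is easy to reason about, but it flattens year-to-year trends, so projections built this way are a starting point for modeling rather than a prediction in themselves.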

I think Cayla and I both learned a lot from this project. Cayla learned that she can do really hard things she's never done before. She also learned about planning and organizing data projects, and how truly difficult, but incredibly important, it is to clean your data. I learned that Cayla can learn anything with the right incentive, or within the framework of something that interests her. I also learned that in data analytics and data science, more people need to publish their work in simpler forms. Please don't assume everyone has a PhD.

We presented our story at the Code(Her) conference on Saturday. Cayla reinforced her knowledge of data science during the Intro to Machine Learning session and seemed to have fun learning agile principles while playing games. The day culminated with us presenting to a room full of women. It was really rewarding to see how well Cayla did, and how many people wanted to hear us.

To see our detailed presentation and additional materials, visit my GitHub page.
